
What We Do

Architecture, Engineering & Construction Services

Products

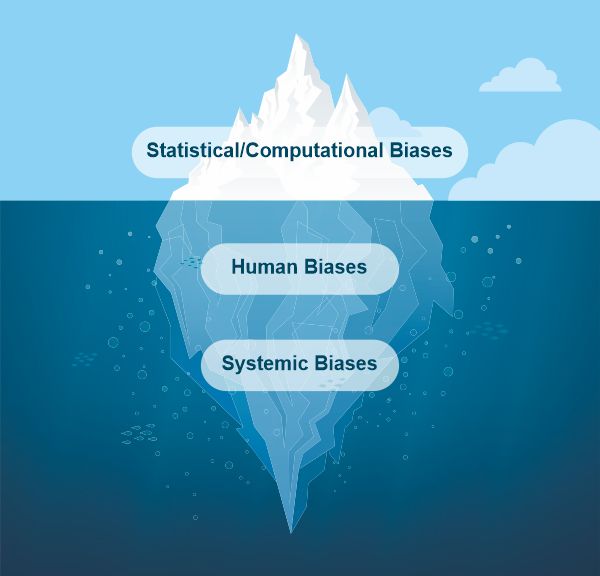

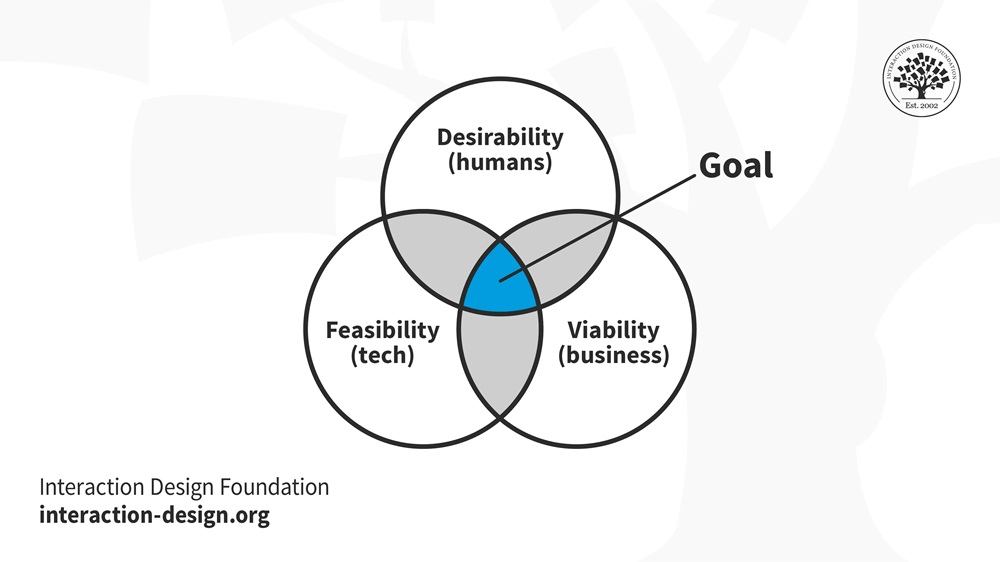

Artificial Intelligence

Data Analytics Services & Solutions

- Enterprise Data Management

- Big Data Services

- Data Science

- Database Services

- Business Intelligence & Dashboards

- Data Integration Services

Automation Services

Software Testing Services

Product & Application Development

Animation

Other Animation Services

- Freight Bill Processing

- Freight Audit And Payment Portal (FAP)

- Invoice Processing

- Air WayBill Processing

- Freight & Cargo Bill Of Lading Processing

- Inland Freight Bill Auditing

- Ocean Freight Auditing

- Freight, Truck & Cargo EDI Implementation

- Purchase Order Entries

- Carriers Insurance Document Management

- Freight, Truck Rate Contract Management

- Tracking & Tracing Status Updates

- Freight Weight Reconciliation

- Driver Log Management

- Freight Rate Reconciliation

- Bill Of Lading Drafting

- Customs - Discharge List & Manifest Creation And Submission

- Customs - Bill Of Entry Submission For Import/Export

- Customer Support Services

- Freight Forwarder Import And Export Operation Services

- Carrier On-Boarding Document Verification & Reference Checking Process

Media Monitoring

Tracking Services

- About Us

- Insights

Vee Technologies’ extraordinary expertise leads to remarkable results.

We share insights, analysis, and research tailored to your interests, and we publish case studies and whitepapers to help you deepen your knowledge and increase your impact.

Product Engineering Solutions

- Leading manufacturer of Residential/Commercial Lawn Mowers with a historical record

- A leading fire truck manufacturer enhances design and development with Vee Technologies' comprehensive design solutions


AEC
